NVIDIA Parakeet v2 vs OpenAI Whisper: Top ASR Model Comparison

⏱ 12 min read | 🤖 AI Automation | 🎯 For Decision Makers & Leaders

Introduction: ASR model comparison

Automatic Speech Recognition (ASR) systems have evolved from simple transcription tools into mission-critical enterprise infrastructure. From call centers and media transcription to analytics, assistants, and multilingual applications, ASR model selection directly impacts cost, latency, and scalability.

This ASR model comparison evaluates NVIDIA Parakeet v2 and OpenAI Whisper, two widely adopted speech recognition models, across architecture, benchmarks, latency, throughput, deployment, licensing, and real-world production trade-offs.

At Logassa LLC, we analyze ASR models through a deployment-first lens, focusing on operational efficiency, system scalability, and long-term enterprise viability.

Architecture Overview: ASR model comparison

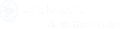

NVIDIA Parakeet v2

Parakeet v2 is built on a FastConformer encoder paired with a Token-and-Duration Transducer (TDT) decoder.

This architecture enables:

- Extremely high GPU throughput

- Low-latency decoding

- Native word-level timestamps

By explicitly predicting how many encoder frames each token spans, the TDT decoder can skip ahead during decoding and keeps token-to-audio alignment stable, which makes Parakeet well suited to subtitles, analytics and other time-sensitive workflows.
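To make the duration idea concrete, here is a toy sketch of greedy TDT-style decoding. It is not NeMo's implementation: `toy_joint` is a hypothetical stand-in for the trained joint network that returns a token (or blank) plus a predicted duration in encoder frames, and the 80 ms frame length is an assumption. Because each step jumps ahead by the predicted duration, the decoder skips frames, and the frame index at which a token is emitted doubles as its timestamp.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical joint network: (frame index, tokens so far) -> (token or None for blank,
# predicted duration in encoder frames).
JointFn = Callable[[int, List[str]], Tuple[Optional[str], int]]

FRAME_SEC = 0.08  # assumption: 80 ms per encoder frame after subsampling


def tdt_greedy_decode(num_frames: int, joint: JointFn,
                      max_symbols_per_frame: int = 5) -> Tuple[List[Tuple[str, float]], int]:
    """Toy greedy TDT-style decoding. Returns ((token, start_sec) pairs, joint calls)."""
    t, steps, symbols_here = 0, 0, 0
    history: List[str] = []
    out: List[Tuple[str, float]] = []
    while t < num_frames:
        token, duration = joint(t, history)
        steps += 1
        if token is not None:
            history.append(token)
            out.append((token, round(t * FRAME_SEC, 2)))  # frame index -> timestamp
            symbols_here += 1
            # Duration 0 after a token means "emit another token on this same frame".
            if duration == 0 and symbols_here < max_symbols_per_frame:
                continue
        t += max(duration, 1)  # skip ahead by the predicted duration (at least 1 frame)
        symbols_here = 0
    return out, steps


# Scripted toy joint network: frames not listed are treated as blank with duration 1.
SCRIPT = {0: (None, 3), 3: ("hello", 4), 7: (None, 2), 9: ("world", 6)}


def toy_joint(t: int, history: List[str]) -> Tuple[Optional[str], int]:
    return SCRIPT.get(t, (None, 1))


tokens, calls = tdt_greedy_decode(15, toy_joint)
print(tokens, calls)  # [('hello', 0.24), ('world', 0.72)] 4
```

Fifteen frames are decoded with four joint evaluations; a frame-by-frame transducer decoder would need at least one evaluation per frame, which is where the throughput advantage of duration prediction comes from.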

OpenAI Whisper

Whisper uses a Transformer encoder–decoder architecture trained end-to-end on large-scale, weakly supervised multilingual data (about 680,000 hours for the original release).

Key strengths include:

- Strong generalization

- Multilingual speech recognition

- Built-in translation capabilities

However, Whisper relies on autoregressive decoding, generating one token at a time conditioned on the tokens already produced, which adds latency and lowers throughput in enterprise-scale deployments.
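The latency cost comes from the sequential dependency: every output token needs another decoder pass over everything produced so far. The toy loop below illustrates that structure only; `toy_decoder_step` is a hypothetical placeholder for Whisper's text decoder, the prompt merely imitates the style of Whisper's special tokens, and the token cap is an arbitrary safety limit.

```python
from typing import Callable, List, Tuple

# Hypothetical decoder step: takes every token so far, returns the next token.
DecoderStep = Callable[[List[str]], str]


def autoregressive_decode(step: DecoderStep, prompt: List[str],
                          eot: str = "<|endoftext|>",
                          max_new_tokens: int = 200) -> Tuple[List[str], int]:
    """Greedy autoregressive decoding: one sequential decoder pass per output token."""
    tokens = list(prompt)
    passes = 0
    while len(tokens) - len(prompt) < max_new_tokens:  # arbitrary safety cap
        next_token = step(tokens)  # depends on all previous tokens, so passes run in sequence
        passes += 1
        if next_token == eot:
            break
        tokens.append(next_token)
    return tokens[len(prompt):], passes


# Illustrative prompt in the style of Whisper's special tokens.
PROMPT = ["<|startoftranscript|>", "<|en|>", "<|transcribe|>", "<|notimestamps|>"]
SCRIPTED = ["hello", "world", "<|endoftext|>"]


def toy_decoder_step(tokens: List[str]) -> str:
    # A real decoder would attend over the audio features and all prior tokens.
    return SCRIPTED[len(tokens) - len(PROMPT)]


text, passes = autoregressive_decode(toy_decoder_step, PROMPT)
print(text, passes)  # ['hello', 'world'] 3
```

Two words plus the end token cost three sequential passes; a long transcript costs hundreds, and beam search multiplies the work done at each step.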

The Manual Reality (and Why It Doesn’t Scale)

Most pipeline integrity teams rely on spreadsheets, shared drives, emails and templates. The process works, but people become the integration layer.

Where time is lost

- Downloading and reformatting in-line inspection (ILI) data

- Reconciling mismatched IDs and locations

- Copying narratives into templates

- Chasing missing photos or sign-offs

The Result

- Inconsistent evidence packs

- Higher audit risk

- Slower response to regulator questions

- Knowledge locked in individuals

The Solution: A Pipeline Integrity Evidence-Pack Agent

Think of the agent as a pipeline integrity analyst assistant.

It reads, links, validates and assembles evidence, but never replaces engineering judgment.

What the AI Agent Does (and Does Not Do)

✅ What the Pipeline Integrity Evidence-Pack Agent Does

- Ingests ILI reports, dig packages, photos, work orders and repairs

- Links records by anomaly ID, location, asset context and time

- Generates a structured evidence pack

- Gap-checks missing proof (photos, measurements, sign-offs)

- Summarizes decisions with citations to approved procedures

❌ What the Pipeline Integrity Evidence-Pack Agent Does Not Do

- Approve repairs or override engineers

- Invent measurements or infer missing data

- Cite unapproved documents

- Hide uncertainty or low-confidence matches

Rule: No citation → no claim.
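The rule can be enforced mechanically before any text reaches a draft pack. A minimal sketch, assuming each drafted claim carries a list of source IDs and that the set of approved document IDs is known; the `Claim` type, `enforce_citations` and all IDs are hypothetical. Rejected claims are reported rather than dropped silently, so uncertainty stays visible.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Claim:
    text: str
    sources: List[str] = field(default_factory=list)  # record or document IDs (hypothetical)


def enforce_citations(claims: List[Claim], approved: Set[str]) -> Tuple[List[Claim], List[str]]:
    """Keep claims whose every source is approved; report the rest for human review."""
    kept: List[Claim] = []
    rejected: List[str] = []
    for c in claims:
        if not c.sources:
            rejected.append(f"NO CITATION: {c.text}")
        elif not set(c.sources) <= approved:
            bad = sorted(set(c.sources) - approved)
            rejected.append(f"UNAPPROVED SOURCE {bad}: {c.text}")
        else:
            kept.append(c)
    return kept, rejected


approved_docs = {"PROC-101", "ILI-2024-RUN3", "WO-5531"}
draft = [
    Claim("Anomaly A-17 was excavated and verified.", ["ILI-2024-RUN3", "WO-5531"]),
    Claim("Wall loss is within tolerance.", []),
    Claim("Sleeve repair follows vendor guidance.", ["VENDOR-BLOG-7"]),
]
kept, rejected = enforce_citations(draft, approved_docs)
for line in rejected:
    print(line)
```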

- Less time spent assembling packs (pilot target; measure baseline vs. after)

- Fewer missing-attachment findings (goal via automated gap-checking)

- Traceability per anomaly (every claim tied to a record or source)

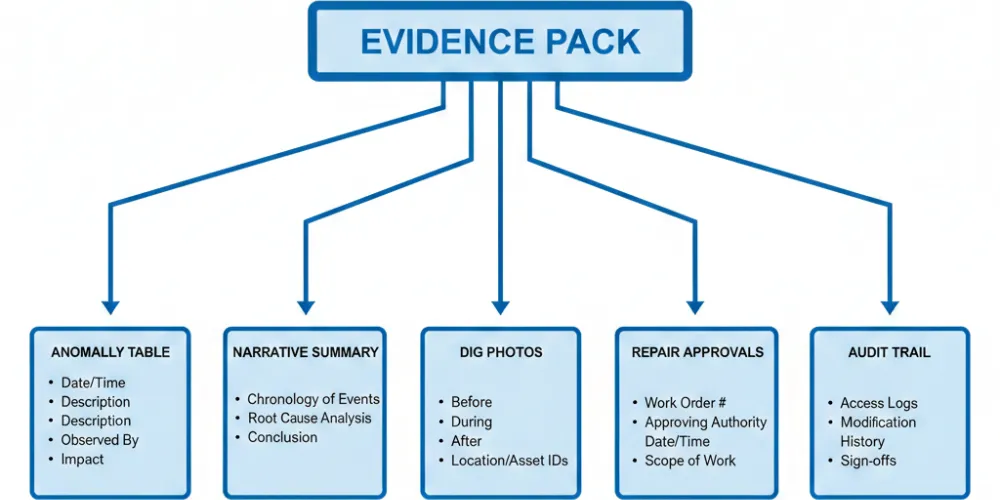

Real-World Implementation Flow: Pipeline Integrity Evidence-Pack Agent

ILI Run Arrives

The vendor delivers the ILI report and anomaly list (PDF/CSV).

Single Source of Truth Created

The agent normalizes IDs, aligns GIS locations and creates one anomaly record per issue.

Digs Are Linked

Dig notes, measurements and photos are matched to anomalies, with a confidence score on each match.

Repairs & Close-Out Attached

Work orders, repair methods, post-repair checks and approvals are pulled in.

Evidence Pack Generated (Draft)

Includes:

- Narrative summary

- Anomaly table

- “What we did and why”

- Attachment checklist

Human Review & Approval (Mandatory)

Engineers validate, edit if needed and approve final packs.
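The flow can be read as a small state machine in which nothing becomes final without an engineer. A minimal sketch, assuming hypothetical stage names that mirror the steps; the design point is that the agent can advance a pack only as far as a draft, and only a named human reviewer can approve it.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    ILI_RECEIVED = 1
    RECORDS_NORMALIZED = 2
    DIGS_LINKED = 3
    REPAIRS_ATTACHED = 4
    DRAFT_GENERATED = 5
    APPROVED = 6


class EvidencePack:
    def __init__(self, anomaly_id: str) -> None:
        self.anomaly_id = anomaly_id
        self.stage = Stage.ILI_RECEIVED
        self.approved_by: Optional[str] = None

    def agent_advance(self) -> Stage:
        """The agent may move through automated stages, but never past DRAFT_GENERATED."""
        if self.stage.value >= Stage.DRAFT_GENERATED.value:
            raise PermissionError("Agent cannot approve; human review is mandatory.")
        self.stage = Stage(self.stage.value + 1)
        return self.stage

    def approve(self, engineer: str) -> None:
        """Only a named engineer can approve, and only a complete draft."""
        if self.stage is not Stage.DRAFT_GENERATED:
            raise ValueError("Only a complete draft can be approved.")
        if not engineer.strip():
            raise ValueError("Approval requires a named engineer.")
        self.stage, self.approved_by = Stage.APPROVED, engineer


pack = EvidencePack("A-17")
while pack.stage is not Stage.DRAFT_GENERATED:
    pack.agent_advance()
try:
    pack.agent_advance()  # the agent tries to finalize on its own
except PermissionError as err:
    print(err)
pack.approve("engineer-042")  # hypothetical reviewer ID
print(pack.stage, pack.approved_by)
```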

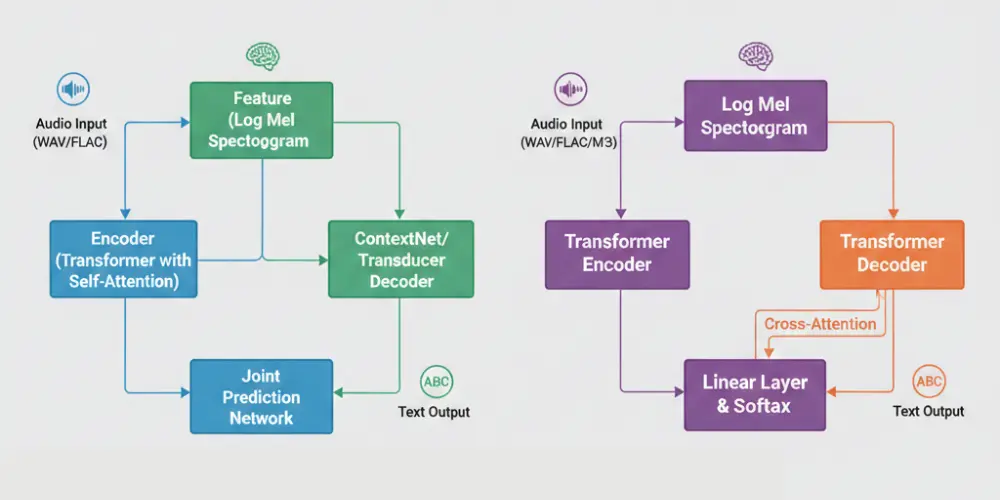

What a Complete Evidence Pack Includes: Pipeline Integrity Evidence-Pack Agent

- ILI run context and definitions

- Anomaly prioritization rationale

- Dig verification data + photos

- Repair actions and work order references

- Validation, close-out summary and signatures
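One way to keep packs consistent is to give these five components a fixed schema, so an empty section is visible rather than silently omitted. A minimal sketch; the class name, field names and sample entries are hypothetical.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class EvidencePackContents:
    anomaly_id: str
    ili_run_context: List[str] = field(default_factory=list)          # run ID, definitions
    prioritization_rationale: List[str] = field(default_factory=list)
    dig_verification: List[str] = field(default_factory=list)         # measurements + photo refs
    repair_actions: List[str] = field(default_factory=list)           # work order references
    closeout_and_signatures: List[str] = field(default_factory=list)

    def empty_sections(self) -> List[str]:
        """Names of required sections that have no content yet, in schema order."""
        return [f.name for f in fields(self)
                if f.name != "anomaly_id" and not getattr(self, f.name)]


pack = EvidencePackContents(
    anomaly_id="A-17",
    ili_run_context=["ILI-2024-RUN3 definitions"],
    dig_verification=["depth measurement", "photo DIG-88-01.jpg"],
    repair_actions=["WO-5531"],
)
print(pack.empty_sections())  # ['prioritization_rationale', 'closeout_and_signatures']
```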

Common Gaps the Agent Flags Automatically: Pipeline Integrity Evidence-Pack Agent

- Location mismatches (ILI vs dig GPS/milepost)

- Missing photo evidence

- Incomplete measurements

- Missing repair close-out sign-offs

- Unclear deferment rationale
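Most of these gaps reduce to deterministic rules over linked records, which is why they suit a rules engine rather than a language model's judgment. A minimal sketch, assuming hypothetical record fields and an assumed 0.05-mile tolerance for ILI-versus-dig location agreement; real tolerances and required measurements come from your own procedures.

```python
from typing import Dict, List, Optional

MILEPOST_TOLERANCE = 0.05  # assumption: set from your procedures, not a standard value
REQUIRED_MEASUREMENTS = {"depth_pct", "length_in", "width_in"}  # hypothetical field names


def flag_gaps(anomaly: Dict, dig: Optional[Dict], repair: Optional[Dict]) -> List[str]:
    gaps: List[str] = []
    if dig is None:
        # No dig: the record must at least explain why the work was deferred.
        if not anomaly.get("deferment_rationale"):
            gaps.append("No dig and no deferment rationale")
        return gaps
    if abs(anomaly["milepost"] - dig["milepost"]) > MILEPOST_TOLERANCE:
        gaps.append(f"Location mismatch: ILI {anomaly['milepost']} vs dig {dig['milepost']}")
    if not dig.get("photos"):
        gaps.append("Missing photo evidence")
    missing = REQUIRED_MEASUREMENTS - set(dig.get("measurements", {}))
    if missing:
        gaps.append(f"Incomplete measurements: {sorted(missing)}")
    if repair is not None and not repair.get("closeout_signoff"):
        gaps.append("Missing repair close-out sign-off")
    return gaps


anomaly = {"id": "A-17", "milepost": 12.40}
dig = {"milepost": 12.47, "photos": [], "measurements": {"depth_pct": 32, "length_in": 4.1}}
repair = {"work_order": "WO-5531", "closeout_signoff": None}
for gap in flag_gaps(anomaly, dig, repair):
    print("-", gap)
```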

Implementation Blueprint (Agentic AI Pattern): Pipeline Integrity Evidence-Pack Agent

Data Inputs

- ILI reports and anomaly tables

- GIS pipeline routes

- Dig packages (notes, photos, measurements)

- CMMS work orders

- Internal procedures and standards

Agent Steps

- Normalize IDs, locations, timestamps

- Match anomalies to digs with confidence scoring

- Retrieve approved policies and procedures for citations (retrieval-augmented generation, RAG)

- Validate completeness via rules engine

- Generate draft evidence pack + gap list

- Route to engineer for approval
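Normalization and confidence-scored matching are the core of the linking steps. The sketch below is one possible approach, not a prescribed algorithm: it canonicalizes anomaly IDs with a regular expression, scores each candidate dig by ID agreement and milepost distance, and routes low-confidence matches to an engineer instead of auto-linking them. The ID format, weights, distance scale and 0.8 threshold are all assumptions.

```python
import re
from typing import Dict, List, Optional, Tuple


def normalize_id(raw: str) -> str:
    """'a 017', 'A-17', 'ANOM_0017' -> 'A-17' (assumed house format: first number wins)."""
    m = re.search(r"\d+", raw)
    return f"A-{int(m.group())}" if m else raw.strip().upper()


def match_score(anomaly: Dict, dig: Dict, scale_miles: float = 0.1) -> float:
    id_match = 1.0 if normalize_id(anomaly["id"]) == normalize_id(dig["anomaly_ref"]) else 0.0
    distance = abs(anomaly["milepost"] - dig["milepost"])
    proximity = max(0.0, 1.0 - distance / scale_miles)  # 1.0 at same spot, 0.0 beyond scale
    return round(0.6 * id_match + 0.4 * proximity, 3)    # assumed weights


def best_match(anomaly: Dict, digs: List[Dict],
               threshold: float = 0.8) -> Tuple[Optional[str], float, str]:
    scored = [(match_score(anomaly, d), d["dig_id"]) for d in digs]
    if not scored:
        return None, 0.0, "no candidates"
    score, dig_id = max(scored)
    status = "auto-linked" if score >= threshold else "needs engineer review"
    return dig_id, score, status


digs = [
    {"dig_id": "DIG-88", "anomaly_ref": "a 017", "milepost": 12.42},
    {"dig_id": "DIG-91", "anomaly_ref": "A-19", "milepost": 12.41},
]
print(best_match({"id": "ANOM_0017", "milepost": 12.40}, digs))  # ('DIG-88', 0.92, 'auto-linked')
print(best_match({"id": "A-19", "milepost": 12.60}, digs))       # ('DIG-91', 0.6, 'needs engineer review')
```

Scoring on both ID and location means a clean ID match at the wrong milepost still lands in the review queue, which is the behavior the "hide no low-confidence matches" rule asks for.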

Reference Technology Stack (Typical)

AI Layer

- RAG with vector database

- Agent workflow orchestrator

- Document parsing (PDFs, images, metadata)

- Rules engine for completeness validation
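At its core, the RAG component retrieves the approved procedure passages most similar to a query and carries their IDs forward so each statement can cite them. Production systems use learned embeddings and a vector database; the sketch below swaps in a plain bag-of-words cosine similarity from the standard library purely to show the retrieve-then-cite shape. Passage IDs, text and the 0.2 cutoff are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def vectorize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: Dict[str, str], k: int = 2,
             min_score: float = 0.2) -> List[Tuple[str, float]]:
    q = vectorize(query)
    scored = [(doc_id, cosine(q, vectorize(text))) for doc_id, text in corpus.items()]
    scored = [(d, s) for d, s in scored if s >= min_score]  # below cutoff: nothing to cite
    return sorted(scored, key=lambda x: x[1], reverse=True)[:k]


APPROVED_PROCEDURES = {  # hypothetical placeholder passages
    "PROC-101-4.2": "Verify anomalies selected for excavation by measuring depth, length and width",
    "PROC-101-6.1": "Photograph every anomaly before and after repair and attach photos to the dig record",
    "PROC-204-2.3": "Close-out requires sign-off by the responsible integrity engineer",
}
hits = retrieve("Which photos are required before and after repair?", APPROVED_PROCEDURES)
for doc_id, score in hits:
    print(f"{doc_id}: {score:.2f}")
```

An empty result is meaningful here: under the "no citation, no claim" rule it means the agent should report the gap instead of writing the statement.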

Outputs

- Word/PDF evidence packs

- Attachment bundles

- Immutable audit logs and approvals

- Status dashboards
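Immutable audit logs are often approximated with an append-only, hash-chained log: each entry stores the hash of the previous one, so editing any past entry breaks verification. A minimal sketch using only the standard library; the `AuditLog` class and event names are hypothetical, timestamps are omitted to keep the example deterministic, and durable, access-controlled storage is out of scope.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(body: Dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, actor: str, action: str, target: str) -> Dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"seq": len(self.entries), "actor": actor, "action": action,
                "target": target, "prev": prev}
        body["hash"] = entry_hash(body)
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check each entry points at its predecessor."""
        prev = GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or entry_hash(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True


log = AuditLog()
log.append("agent", "generated_draft", "A-17")
log.append("engineer-042", "approved", "A-17")
print(log.verify())                       # True
log.entries[0]["actor"] = "engineer-042"  # tamper with history
print(log.verify())                       # False
```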

Pilot Metrics That Matter

Operational

- Time to assemble evidence pack

- Rework rate due to missing items

- Time from ILI receipt → review-ready pack

Quality

- Attachment completeness score

- Traceability score

- Mismatch detection rate

- Audit response time
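Most of these metrics are simple ratios, and pinning down their definitions before the pilot makes the baseline-versus-after comparison meaningful. A minimal sketch under assumed definitions (your pilot may define them differently): attachment completeness as required attachments present over required, traceability as cited claims over all claims, mismatch detection rate as reviewer-confirmed mismatches the agent flagged over all confirmed mismatches, and elapsed hours from ILI receipt to a review-ready pack. All sample values are hypothetical.

```python
from datetime import datetime
from typing import Optional, Set


def ratio(num: int, den: int) -> Optional[float]:
    return round(num / den, 3) if den else None  # undefined when there is nothing to measure


def attachment_completeness(required: Set[str], present: Set[str]) -> Optional[float]:
    return ratio(len(required & present), len(required))


def traceability(cited_claims: int, total_claims: int) -> Optional[float]:
    return ratio(cited_claims, total_claims)


def mismatch_detection_rate(flagged: Set[str], confirmed: Set[str]) -> Optional[float]:
    return ratio(len(flagged & confirmed), len(confirmed))


def hours_to_review_ready(ili_received: datetime, pack_ready: datetime) -> float:
    return round((pack_ready - ili_received).total_seconds() / 3600, 1)


required = {"dig_photos", "ut_measurements", "work_order", "closeout_signoff"}
present = {"dig_photos", "work_order", "closeout_signoff"}
print(attachment_completeness(required, present))                                   # 0.75
print(traceability(47, 50))                                                         # 0.94
print(mismatch_detection_rate({"A-17", "A-22", "A-30"}, {"A-17", "A-22", "A-41"}))  # 0.667
print(hours_to_review_ready(datetime(2024, 3, 1, 8, 0), datetime(2024, 3, 4, 14, 30)))  # 78.5
```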

Final Thought: Pipeline Integrity Evidence-Pack Agent

Pipeline integrity programs don’t fail for lack of effort; they fail when proof is fragmented.

At Logassa Inc, our Pipeline Integrity Evidence-Pack Agents remove the paperwork burden while strengthening traceability, audit readiness and regulatory confidence, without compromising engineering authority.

👉 The best time to start was yesterday. The second-best time is today, with Logassa Inc and our advanced AI solutions.

Learn more about our work on our blog. Happy reading!